The global eCOA (electronic clinical outcome assessment) solutions market was valued at over two billion dollars in 2025 and is projected to expand rapidly over the coming decade, driven by increased clinical trial activity, digital transformation efforts, and the integration of mobile, cloud, and AI-enabled tools for outcome measurement.

This growth reflects not just broader industry digitization but an evolving expectation: that outcome data should be accurate, audit-ready through validated systems and controlled operational processes, and capable of supporting decentralized workflows. As more sponsors and CROs incorporate eCOA into their trial strategies, and as regulators continue to emphasize electronic data integrity, the stakes of successful implementation have never been higher.

Yet with greater adoption comes greater complexity: pitfalls around site burden, mid-study amendments, device logistics, and training gaps can undermine even the most advanced platforms if not thoughtfully addressed.

The good news? These common challenges can be anticipated and managed with practical, operationally aligned planning, turning eCOA from a source of friction into a strategic advantage for trial success.

1) Pitfall: Site burden that quietly snowballs

What it looks like

- Coordinators juggling multiple systems (EDC, eCOA, IRT, eConsent), each with different passwords, roles, and support paths.

- “Extra steps” that weren’t obvious in design: device inventory checks, visit activation, diary compliance troubleshooting, re-training new staff, printing backups, and answering participant tech questions.

- Increased time spent on operations rather than patients.

Sites have been vocal that eCOA can feel disruptive and can demand specific skills and resources, especially when workflows aren’t simplified or flexible.

How to avoid it

- Design around the site’s day, not the sponsor’s org chart. Map the exact visit flow and identify who does what (and when) for provisioning, reminders, replacements, resyncs, and compliance follow-up.

- Make ownership explicit. Define a RACI that covers device management, account access, visit/diary configuration, data review, and escalation paths (site → CRA → helpdesk → vendor → sponsor).

- Reduce “system switching.” Where possible, align user roles, naming conventions (visit names/timepoints), and support workflows across platforms. Even modest harmonization reduces cognitive load.

- Plan for staffing realities. Assume turnover and rotating coverage; build processes that work when your “super user” is out.
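The ownership model above can be made checkable rather than living in a slide. The Python sketch below is illustrative only: the activity names come from the RACI bullet and the escalation order follows the site → CRA → helpdesk → vendor → sponsor path, but every role assignment in `RACI` is a hypothetical placeholder. `validate_raci` applies the common RACI convention of exactly one Accountable and at least one Responsible party per activity.

```python
from typing import Dict, List, Optional

# Escalation order from the RACI bullet: site -> CRA -> helpdesk -> vendor -> sponsor.
ESCALATION_PATH: List[str] = ["site", "CRA", "helpdesk", "vendor", "sponsor"]

# Hypothetical RACI matrix: activity -> {party: "R" | "A" | "C" | "I"}.
# Assignments are placeholders; "data review" deliberately lacks an "A".
RACI: Dict[str, Dict[str, str]] = {
    "device management": {"site": "R", "vendor": "A", "CRA": "I"},
    "account access": {"helpdesk": "R", "vendor": "A", "site": "I"},
    "visit/diary configuration": {"vendor": "R", "sponsor": "A", "CRA": "C"},
    "data review": {"CRA": "R", "site": "C"},
}


def next_escalation(current: str) -> Optional[str]:
    """Return the next party in the escalation path, or None at the top."""
    idx = ESCALATION_PATH.index(current)
    return ESCALATION_PATH[idx + 1] if idx + 1 < len(ESCALATION_PATH) else None


def validate_raci(raci: Dict[str, Dict[str, str]]) -> List[str]:
    """Flag activities without exactly one Accountable or without any Responsible party."""
    problems: List[str] = []
    for activity, roles in raci.items():
        accountable = [party for party, code in roles.items() if code == "A"]
        responsible = [party for party, code in roles.items() if code == "R"]
        if len(accountable) != 1:
            problems.append(f"{activity}: expected exactly one 'A', found {len(accountable)}")
        if not responsible:
            problems.append(f"{activity}: no 'R' assigned")
    return problems


if __name__ == "__main__":
    print(next_escalation("helpdesk"))  # vendor
    for problem in validate_raci(RACI):
        print(problem)  # data review: expected exactly one 'A', found 0
```

Running a check like this at study setup surfaces ownership gaps before a site hits one in practice.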

Quick gut-check: If the site needs a cheat sheet to remember how to run a standard visit, your workflow is too complex.

2) Pitfall: Mid-study amendments that create data chaos

What it looks like

- A protocol amendment changes a schedule, adds a questionnaire, modifies wording, or alters completion windows.

- Some patients/sites are on “old” versions while others are on “new,” leading to reconciliation headaches.

- Confusion about what changed, when it goes live, and what’s expected operationally (not just in the protocol text).

Protocol and version changes can impact data management plans and require updates across the study life cycle (amendments, version updates, reconciliation).

How to avoid it

- Treat amendments like releases. Build a formal “eCOA change package” (not just updated screenshots): what changed, why, who it impacts, go-live timing by country/site, and what to do for each patient scenario (already enrolled vs new).

- Bake in UAT for amendments, not only initial go-live. If eCOA feeds into EDC or requires mapping, test transfers and mapping after changes, not just at the start.

- Control versioning ruthlessly. Use clear naming and effective dates; document rules for mixed-version cohorts. If wording, response scales, or completion windows change, assess measurement comparability and document how mixed-version data will be interpreted and pooled (if at all).

- Communicate in the site’s language. A one-page “What’s changing at the visit level?” beats a 40-page amendment summary every time.

- Don’t skip the operational translation. Vendor case studies regularly highlight that the written amendment often misses operational requirements that must be implemented correctly inside eCOA.
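Effective dating and mixed-version rules can be expressed as data rather than tribal knowledge. The sketch below is a hypothetical illustration, not Medable Studio's or any vendor's API: `EcoaRelease`, the site IDs, version labels, and dates are invented. It resolves which questionnaire version is live at a site on a given date, then applies one of two assumed policies for already-enrolled patients: stay on the version that was live at enrollment, or follow whatever the site is currently running.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional


@dataclass
class EcoaRelease:
    version: str
    # Go-live date per site; a site absent from the map has not activated this release.
    effective_by_site: Dict[str, date]


def version_in_effect(releases: List[EcoaRelease], site: str, on: date) -> Optional[str]:
    """Return the most recently activated release that is live at `site` on `on`, if any."""
    live = [
        (r.effective_by_site[site], r.version)
        for r in releases
        if site in r.effective_by_site and r.effective_by_site[site] <= on
    ]
    return max(live)[1] if live else None


def version_for_assessment(
    releases: List[EcoaRelease],
    site: str,
    enrolled_on: date,
    assessed_on: date,
    keep_enrollment_version: bool,
) -> Optional[str]:
    """Assumed mixed-version rule: enrolled patients either stay on the version live at
    enrollment or follow the version the site runs on the assessment date."""
    anchor = enrolled_on if keep_enrollment_version else assessed_on
    return version_in_effect(releases, site, anchor)


# Hypothetical releases: the amendment (v2.0) is live at site-101 but not yet at site-202.
releases = [
    EcoaRelease("v1.0", {"site-101": date(2025, 1, 6), "site-202": date(2025, 1, 13)}),
    EcoaRelease("v2.0", {"site-101": date(2025, 6, 2)}),
]

if __name__ == "__main__":
    print(version_in_effect(releases, "site-101", date(2025, 7, 1)))  # v2.0
    print(version_in_effect(releases, "site-202", date(2025, 7, 1)))  # v1.0
    # Patient enrolled at site-101 before the amendment went live, policy "stay":
    print(version_for_assessment(releases, "site-101", date(2025, 3, 1), date(2025, 7, 1), True))  # v1.0
```

Which policy applies is a study decision; the point of encoding it is that the rule is written down once and applied the same way for every patient scenario.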

Quick gut-check: If you can’t explain the amendment impact in 60 seconds to a coordinator, it isn’t ready to deploy.

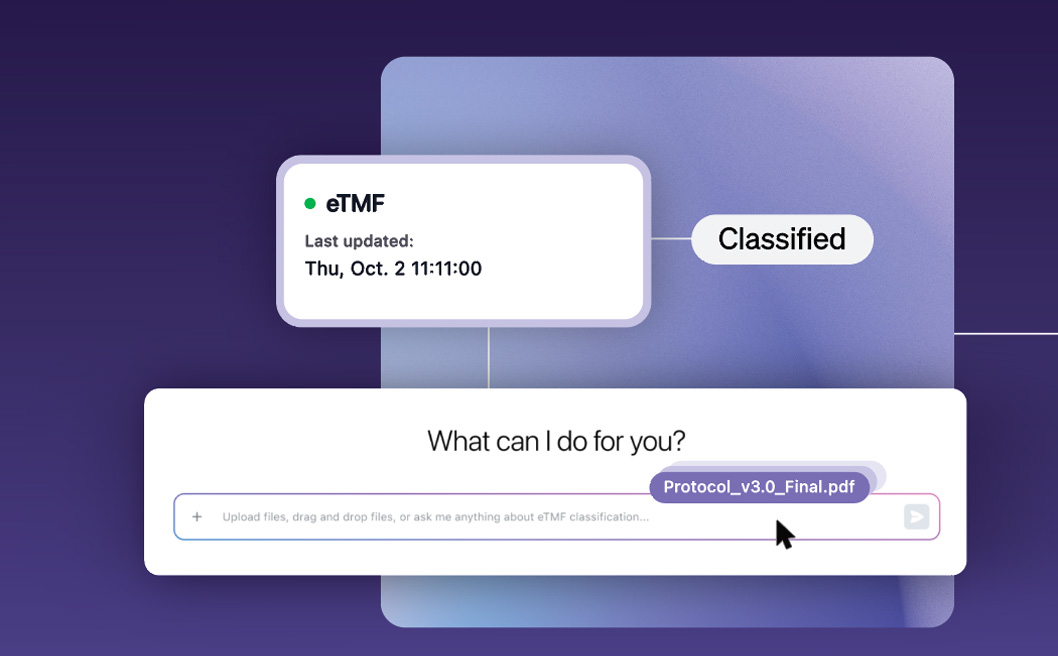

Medable Studio was built, in part, to help sponsors avoid this pitfall. Medable Studio enables teams to manage amendments like true product releases by clearly defining what changed, who it impacts, and when and how it goes live by country, site, or patient cohort. Built-in version control, effective dating, and amendment-specific UAT ensure changes are validated end to end, including downstream integrations such as EDC and data mappings. With intuitive tooling that translates protocol updates into visit-level, site-ready execution, Medable Studio removes ambiguity, protects data integrity across mixed versions, and keeps studies operationally aligned as they evolve mid-study.

3) Pitfall: Device logistics that derail timelines and compliance

What it looks like

- Devices arrive late, can’t clear customs, don’t have the right language pack, or don’t match country power standards.

- Sites can’t maintain charging, storage, and chain-of-custody processes.

- Replacement workflows are unclear (lost, stolen, broken, OS update failures).

- Participants struggle with BYOD (notifications, OS compatibility, changing phones) or provisioned devices (carrying a second phone).

Even high-level implementation guidance consistently calls out how complex device distribution, site training, and ongoing support become, especially in global trials.

How to avoid it

- Choose your provisioning model intentionally (BYOD vs provisioned vs hybrid). Hybrid often reduces exclusion while preserving consistency, but it must be planned, not improvised.

- Write a “device playbook” before first-patient-in:

  - shipping and depots (by region)

  - customs/import assumptions

  - spares strategy (per site/region)

  - replacement SLAs

  - offline use expectations and sync behavior

  - accessory needs (chargers, cases, screen protectors)

- Operationalize chain-of-custody. Make it easy for sites to track: received → assigned → returned → quarantined → reissued (or retired).

- Plan for tech reality: OS updates, Wi-Fi constraints, clinic connectivity, and power availability, then test in conditions that look like real sites, not office Wi-Fi.
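The chain-of-custody flow is essentially a small state machine, and encoding it that way makes invalid moves (for example, reissuing a device that was never quarantined) impossible to record. A minimal Python sketch follows; the state names come from the chain-of-custody bullet, while the `Device` class, the serial format, and the reissued → returned loop are assumptions for illustration.

```python
from typing import Dict, List, Set

# Allowed custody moves, following the flow described above:
# received -> assigned -> returned -> quarantined -> reissued (or retired).
# Assumption: a reissued device can later come back ("returned"), repeating the cycle.
TRANSITIONS: Dict[str, Set[str]] = {
    "received": {"assigned"},
    "assigned": {"returned"},
    "returned": {"quarantined"},
    "quarantined": {"reissued", "retired"},
    "reissued": {"returned"},
    "retired": set(),  # terminal: the device leaves the study pool
}


class Device:
    """Hypothetical device record that only accepts moves listed in TRANSITIONS."""

    def __init__(self, serial: str) -> None:
        self.serial = serial
        self.state = "received"
        self.history: List[str] = ["received"]

    def move_to(self, new_state: str) -> None:
        allowed = TRANSITIONS.get(self.state, set())
        if new_state not in allowed:
            raise ValueError(f"{self.serial}: cannot move from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


if __name__ == "__main__":
    device = Device("DEV-0001")
    for step in ["assigned", "returned", "quarantined", "reissued"]:
        device.move_to(step)
    print(device.history)
    try:
        device.move_to("assigned")  # skipping return and quarantine is rejected
    except ValueError as err:
        print(err)
```

A real custody log would also record who made each move and when, which is what makes the record attributable and contemporaneous.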

Quick gut-check: If you don’t know how a site gets a replacement device within an agreed SLA for that region/study, you’re planning for protocol deviations.

4) Pitfall: Training gaps that show up as “data issues”

What it looks like

- Sites forget setup steps after a long startup-to-first-patient gap.

- New staff join after site initiation and never get fully trained.

- Participants get inconsistent instructions (“do it at home” vs “do it here”), creating missingness or timestamp problems.

- Helpdesk becomes the default trainer.

Regulators expect electronic data to meet core data integrity principles (often summarized as ALCOA+: attributable, legible, contemporaneous, original, accurate, plus complete, consistent, enduring, and available), and meeting them depends heavily on validated processes and proper controls.

How to avoid it

- Train to tasks, not features. Build role-based modules: coordinator workflow, investigator oversight, CRA monitoring, patient onboarding.

- Use micro-training + refreshers. 5–10 minute “how to do X” content beats a single 90-minute session everyone forgets.

- Create a retraining trigger list: protocol amendment, screen changes, new questionnaire, high turnover, a site’s missingness threshold, repeated helpdesk tickets.

- Standardize participant onboarding. Provide scripted instructions and a simple one-pager (what to do, when to do it, what to do if it fails).

- Measure training effectiveness. Track early compliance, time-to-first-entry, helpdesk ticket categories, and repeat issues by site, then intervene quickly.
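Two of the retraining triggers listed above, a site's missingness threshold and repeated helpdesk tickets, are straightforward to compute from data most studies already collect. In the sketch below, `SiteStats`, the 10% missingness threshold, and the three-ticket repeat threshold are hypothetical placeholders that would need to be set per study.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SiteStats:
    expected_entries: int  # diary/questionnaire entries due so far
    completed_entries: int  # entries actually completed
    ticket_categories: List[str] = field(default_factory=list)  # one label per helpdesk ticket


def retraining_flags(
    stats: Dict[str, SiteStats],
    max_missing_rate: float = 0.10,  # hypothetical threshold
    max_repeat_tickets: int = 3,  # hypothetical threshold
) -> Dict[str, List[str]]:
    """Return sites that hit a retraining trigger, with the reasons for each."""
    flags: Dict[str, List[str]] = {}
    for site, s in stats.items():
        reasons: List[str] = []
        if s.expected_entries > 0:
            missing_rate = 1 - s.completed_entries / s.expected_entries
            if missing_rate > max_missing_rate:
                reasons.append(f"missingness {missing_rate:.0%}")
        for category, count in Counter(s.ticket_categories).items():
            if count >= max_repeat_tickets:
                reasons.append(f"repeated tickets: {category} x{count}")
        if reasons:
            flags[site] = reasons
    return flags


example_stats = {
    "site-101": SiteStats(100, 97, ["login", "sync"]),
    "site-202": SiteStats(80, 60, ["sync", "sync", "sync", "login"]),
}

if __name__ == "__main__":
    print(retraining_flags(example_stats))
    # {'site-202': ['missingness 25%', 'repeated tickets: sync x3']}
```

Run on a weekly cadence, a check like this surfaces a struggling site from operational signals rather than from a data review listing.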

Quick gut-check: If your first signal of training failure is a data review listing, you found it too late.


5) Pitfall: Instrument licensing and migration timelines that stall go-live

What it looks like

- A sponsor selects a COA/PRO instrument late in the process, assuming it can be “built quickly” in eCOA.

- Licensing approval (and fees) take longer than expected, especially when multiple countries, languages, or vendors are involved.

- The instrument owner requires review/approval of screenshots, formatting, or migration evidence before deployment.

- Translations lag behind startup timelines, or the team discovers late that linguistic validation/cultural adaptation is required.

- A “simple wording change” turns into a compliance risk because it violates instrument copyright or breaks equivalence.

How to avoid it

- Start licensing early. Confirm instrument ownership, licensing requirements, fees, and approval timelines before finalizing build timelines.

- Treat migration like a controlled deliverable. Plan for instrument owner review of formatting, response options, instructions, and screen flows (and don’t assume a PDF copy/paste is acceptable).

- Build a translation plan that matches the protocol footprint. Confirm required languages, regional variants, and whether linguistic validation is needed, then align those timelines with UAT and release readiness.

- Lock down “no-touch” elements. Make it clear which text/formatting cannot be modified without approval (instructions, recall period wording, response scale anchors).

- Keep documentation audit-ready. Maintain a simple package showing licensing status, migration decisions, approvals, and version history so teams aren’t scrambling mid-study.
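Confirming licensing, instrument-owner approvals, and translations before build freeze can be run as an explicit gate. The sketch below assumes a simple per-instrument status record; `InstrumentStatus`, the placeholder instrument names `PRO-A` and `PRO-B`, and the language codes are hypothetical. It lists every blocker rather than stopping at the first, so the team sees the full gap at once.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class InstrumentStatus:
    name: str
    license_confirmed: bool
    owner_approval_required: bool
    owner_approved: bool
    # Language code -> translation ready (including linguistic validation, if required).
    translations: Dict[str, bool] = field(default_factory=dict)


def build_freeze_blockers(instruments: List[InstrumentStatus], required_languages: List[str]) -> List[str]:
    """List every open licensing, approval, or translation item; an empty list means ready."""
    blockers: List[str] = []
    for inst in instruments:
        if not inst.license_confirmed:
            blockers.append(f"{inst.name}: license not confirmed")
        if inst.owner_approval_required and not inst.owner_approved:
            blockers.append(f"{inst.name}: instrument-owner approval pending")
        for lang in required_languages:
            if not inst.translations.get(lang, False):
                blockers.append(f"{inst.name}: {lang} translation not ready")
    return blockers


LANGUAGES = ["en-US", "de-DE", "ja-JP"]
instruments = [
    InstrumentStatus("PRO-A", True, True, True, {"en-US": True, "de-DE": True, "ja-JP": True}),
    InstrumentStatus("PRO-B", True, True, False, {"en-US": True, "de-DE": False}),
]

if __name__ == "__main__":
    for blocker in build_freeze_blockers(instruments, LANGUAGES):
        print(blocker)
```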

Quick gut-check: If licensing, translations, and instrument-owner approvals aren’t confirmed before build finalization, your go-live date is at risk.

Closing thoughts: A practical “pitfall prevention” checklist

Most eCOA “failures” are operational, not technical.

Before go-live, make sure you can answer “yes” to these:

- Site burden: Have we mapped the end-to-end visit workflow and eliminated unnecessary steps?

- Amendments: Do we have a repeatable release process (impact assessment, UAT, comms, version control) for eCOA changes?

- Device logistics: Do we have depots/spares/replacement SLAs and a documented chain-of-custody flow?

- Training: Do we have role-based training, refreshers, and triggers for retraining, plus simple participant onboarding?

- Licensing: Have we confirmed instrument licenses, migration approvals (as required), and translations before build freeze?

If you build for real-world variability up front, you’ll spend far less time firefighting later.