According to Gartner, a request for information, or a request for proposal, is defined as “both the process and documentation used in soliciting bids for potential business or IT solutions required by an enterprise or government agency. The RFI document typically outlines a statement of requirements (SOR) to be met by prospective respondents wishing to make a bid to deliver the required solutions. It might cover products and/or services to meet the given requirements.”

Yet, for anyone entering into a long-term business agreement, a well-written RFI can do so much more than just assess and collect vendor capabilities.

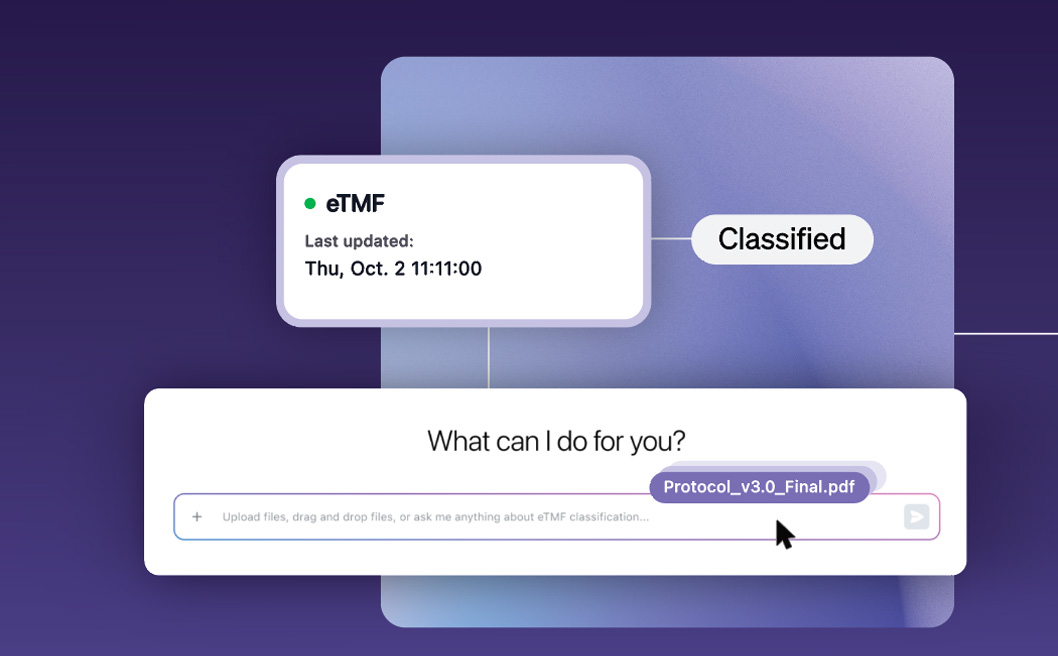

For the last decade, Medable has been transforming the capabilities of organizations across clinical research using the latest technologies. In that time, we’ve learned that the best RFIs define what success looks like, create alignment on measurable outcomes, and establish accountability for roles and responsibilities well before a contract is signed. Done correctly, an RFI becomes a decision-making framework that gives both organizations a clear vision.

Recently, Medable received two RFIs around eCOA from top pharmaceutical organizations. They stood out to us because they were structured around performance, not promises. That distinction makes all the difference.

The difference between good and bad framing

Not all RFIs are created equal. Strong documents go beyond surface-level questions and ask how a capability is configured and validated, how it scales across global deployments, and what controls exist around change management. Measurable results achieved by past clients are key here, as the focus shifts from “Do you have it?” to “How well does it perform, under what conditions, and how was that measured?”

Why key performance indicators (KPIs) change the quality of vendor responses

When an RFI requires measurable metrics, the tone of vendor responses changes: vendors must explain and define real-world operational data rather than make general claims.

Simply put, instead of stating that they provide best-in-class uptime, vendors must disclose actual uptime over the past twelve months and specify the service level agreement (SLA) that supports it. Likewise, instead of describing robust implementation support, they must report the percentage of implementations delivered on schedule and the average time to go live.

When you request metrics such as uptime percentages, mean time to resolution, implementation cycle times, participant completion rates, or audit outcomes, you create clarity and comparability, because responses can be evaluated side by side.

Strong RFIs design the evaluation

Another hallmark of effective RFIs is internal structure. The strongest examples embed evaluation logic directly into the document and ask thoughtful, delivery-based questions. Requirements are categorized clearly, essential capabilities are distinguished from optional enhancements, and scoring frameworks are defined before vendor responses arrive.

This approach reduces bias and improves governance while strengthening defensibility, which can be important in more regulated industries.
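To make the idea of a predefined scoring framework concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than a prescribed method: the `Requirement` class, the `score_vendor` function, the 0 to 5 rating scale, the weights, and the minimum rating of 3 for essential requirements are all hypothetical. Essential requirements act as pass/fail gates, while every requirement contributes to a weighted score.

```python
from dataclasses import dataclass


@dataclass
class Requirement:
    name: str
    essential: bool  # essential requirements act as pass/fail gates
    weight: float    # relative importance in the weighted score


# Hypothetical requirements, fixed before any vendor response arrives.
REQUIREMENTS = [
    Requirement("Trailing twelve-month uptime evidence", True, 3.0),
    Requirement("On-schedule implementation rate", True, 2.0),
    Requirement("Optional analytics dashboard", False, 1.0),
]


def score_vendor(requirements, ratings, min_essential=3):
    """Return (qualified, weighted_score) for one vendor.

    ratings maps a requirement name to a 0-5 evaluator rating.
    A missing rating is treated as 0.
    """
    # A vendor qualifies only if every essential requirement meets the minimum.
    qualified = all(
        ratings.get(r.name, 0) >= min_essential
        for r in requirements
        if r.essential
    )
    # Weighted average across all requirements, essential and optional.
    total_weight = sum(r.weight for r in requirements)
    weighted = sum(r.weight * ratings.get(r.name, 0) for r in requirements)
    return qualified, round(weighted / total_weight, 2)


vendor_a = {
    "Trailing twelve-month uptime evidence": 5,
    "On-schedule implementation rate": 4,
    "Optional analytics dashboard": 2,
}
vendor_b = {
    "Trailing twelve-month uptime evidence": 5,
    "On-schedule implementation rate": 2,
    "Optional analytics dashboard": 5,
}

print(score_vendor(REQUIREMENTS, vendor_a))
print(score_vendor(REQUIREMENTS, vendor_b))
```

In this sketch, the second vendor scores well on an optional enhancement but fails an essential gate, which is the kind of distinction a framework defined in advance is meant to surface.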

Metrics should extend beyond vendor selection

The best RFIs connect evaluation metrics to long-term vendor management, with implementation timelines, platform reliability, support responsiveness, adoption rates, and compliance history serving as useful measures for continued performance review.

By requesting historical performance data at the outset, organizations establish a baseline. This baseline informs service level agreements, quarterly business reviews, and renewal discussions, and it creates clear accountability from the start.

eCOA standards and KPIs to include in your next RFI

Implementation performance KPIs

Average study startup timeline

This measures how long it takes from contract execution to go-live. It directly affects time to first patient in and overall trial velocity.

Percentage of implementations delivered on schedule

A maturity indicator. It reveals whether timelines are aspirational or consistently achieved.

Average configuration cycle time for mid-study amendments

Amendments are inevitable. This KPI shows operational agility and how disruptive protocol changes will be.

Time to database lock or study closeout support benchmarks

Indicates downstream efficiency and the vendor’s ability to support end-of-study milestones.

Platform reliability and technical KPIs

Historical uptime percentage with SLA backing

Not just a promised SLA, but actual trailing twelve-month performance. This separates contractual guarantees from operational reality.
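As a rough illustration of how reported downtime translates into a trailing twelve-month uptime figure, the Python sketch below sums monthly downtime minutes and compares the result against an SLA target. The function names, the downtime figures, and the 365-day period are hypothetical assumptions; how a vendor counts downtime (for example, whether planned maintenance is excluded) is something the RFI itself would need to specify.

```python
def trailing_uptime_pct(downtime_minutes_by_month, days_in_period=365):
    """Uptime percentage over the period, from per-month downtime minutes."""
    total_minutes = days_in_period * 24 * 60
    downtime = sum(downtime_minutes_by_month)
    return 100.0 * (total_minutes - downtime) / total_minutes


def meets_sla(actual_pct, sla_pct):
    """True if measured uptime is at or above the contractual SLA target."""
    return actual_pct >= sla_pct


# Hypothetical downtime (in minutes) for each of the last twelve months.
downtime = [0, 12, 0, 45, 0, 0, 30, 0, 0, 8, 0, 10]

uptime = trailing_uptime_pct(downtime)
print(f"Trailing twelve-month uptime: {uptime:.3f}%")
print("Meets 99.9% SLA:", meets_sla(uptime, 99.9))
print("Meets 99.99% SLA:", meets_sla(uptime, 99.99))
```

With these made-up figures, 105 minutes of downtime in a year satisfies a 99.9% target but not a 99.99% one, which shows why the actual number matters more than the adjective attached to it.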

Mean time to resolution (MTTR) for critical incidents

Reveals how quickly serious issues are resolved, not just acknowledged.
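For readers who want to see how this figure might be derived from incident records, here is a small Python sketch. It assumes MTTR is measured from the time an incident is opened to the time it is resolved; the function name and the incident timestamps are hypothetical, and a vendor may define the measurement window differently.

```python
from datetime import datetime


def mean_time_to_resolution_hours(incidents):
    """Average hours from open to resolution across (opened, resolved) pairs."""
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = [
        (resolved - opened).total_seconds() / 3600
        for opened, resolved in incidents
    ]
    return sum(durations) / len(durations)


# Hypothetical critical incidents: (opened, resolved).
critical_incidents = [
    (datetime(2024, 1, 10, 8, 0), datetime(2024, 1, 10, 10, 30)),
    (datetime(2024, 3, 2, 22, 0), datetime(2024, 3, 3, 3, 0)),
    (datetime(2024, 6, 15, 14, 0), datetime(2024, 6, 15, 14, 45)),
]

mttr = mean_time_to_resolution_hours(critical_incidents)
print(f"MTTR for critical incidents: {mttr:.2f} hours")
```

Asking vendors to state which timestamps they use keeps MTTR figures comparable across responses.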

Disaster recovery: recovery time objective (RTO) and recovery point objective (RPO)

Critical for regulated environments where data integrity and availability are non-negotiable.

Release frequency and regression defect rates

Indicates engineering maturity and change management discipline.

Quality and compliance KPIs

Audit findings history related to the platform

Provides insight into regulatory exposure and inspection readiness.

Validation methodology and frequency of re-validation

Shows how rigorously the system is maintained in validated states.

Data query rate or data correction rate per study

A practical proxy for data quality and usability.

Inspection outcomes involving the platform

Real-world evidence of regulatory scrutiny and system resilience.

Adoption and user experience KPIs

Participant completion rates for eCOA or digital workflows

Directly tied to data completeness and study quality.

User training time to proficiency

Indicates usability and implementation burden.

Participant dropout rates attributable to technology friction

A powerful metric that connects platform design to real-world retention.

User satisfaction scores across sponsors, sites, and participants

A balanced signal of ecosystem performance.

Support and operational KPIs

First response time by severity level

Clarifies responsiveness expectations.

Escalation resolution timeframes

Measures accountability beyond frontline support.

Support ticket volume trends per active study

Helps identify systemic issues or scalability concerns.

Dedicated account management structure and client-to-manager ratio

Signals how much strategic oversight clients actually receive.

Why these KPIs stand out

What makes these KPIs particularly strong is that they are:

- Outcome-oriented rather than feature-oriented

- Historically measurable rather than aspirational

- Operationally revealing rather than marketing-driven

- Useful beyond vendor selection, since they can feed into SLAs and quarterly business review (QBR) scorecards

Most importantly, they connect platform capability to business impact. They measure speed, quality, reliability, compliance, and adoption, which are the levers that determine whether a technology investment succeeds or struggles.

A simple change that makes a meaningful difference

Good RFIs are often sharp RFIs. They succinctly ask about quantifiable performance.

At minimum, every document should clearly define:

- What operational success looks like

- Which metrics will measure that success

- What evidence vendors must provide

- How responses will be evaluated

When these elements are present, vendor selection becomes more predictable, more defensible, and more closely aligned to long-term results.

Final thought: RFIs signal organizational direction

In competitive evaluations, the strongest storyteller is not always the strongest performer. If KPIs, metrics, and the right questions are not defined early, performance risk can be real. A document that demands measurable outcomes, structured responses, and operational transparency signals that an organization values accountability.

When measurable expectations are built into your RFI or RFP from the start, you significantly increase the likelihood that the partner you select can deliver not only features, but outcomes.

Outcomes, ultimately, are what matter in bringing more treatments to patients faster.